Catastrophe Modeling: Framework, Methodology, and Applications in Insurance Risk Management

Struggling to accurately assess catastrophe risk in your insurance portfolio? Are manual risk assessment processes preventing timely decisions? Wondering how to use data for better capital allocation to minimize potential losses and improve growth?

The modern-day hazard landscape is filled with new uncertainties and external shocks that outdated risk assessment methods cannot handle. Advanced risk assessment and tailored mitigation strategies are, therefore, in high demand.

This is where Catastrophe Modeling has emerged as a valuable tool for underwriters and claims experts in the US, a country exposed to a variety of natural disasters, including hurricanes, earthquakes, floods, windstorms, and wildfires. It augments actuarial models with scientific knowledge, sophisticated analytics, and software, helping insurers quickly understand and forecast how an extreme event could affect their insurance portfolios.

Advanced analytics like these form the foundation of modern Insurance Analytics Solutions, enabling insurers to enhance visibility, predictability, and decision accuracy across catastrophe risks.

Climate-related and environmental risks are just some of the many risks that insurance and reinsurance companies have endured over the years. Their growing frequency and severity have driven up demand for insurance coverage. In fact, the catastrophe insurance market reached USD 220.30 billion in 2025 and is expected to grow at a 4.5% CAGR through 2030.
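To put the growth figure in perspective, compounding 4.5% a year from the 2025 base gives the following, assuming five full annual periods to 2030 (the base-year convention is our assumption, not stated in the source):

$$
V_{2030} = V_{2025}\,(1+g)^{5} = 220.30 \times 1.045^{5} \approx 220.30 \times 1.2462 \approx 274.5 \ \text{(USD billion)}
$$

If the projection holds, the market would be roughly a quarter larger in 2030 than in 2025.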

Property and casualty (P&C) insurers, in particular, are under additional pressure from investors and regulatory authorities to be fully aware of natural hazard risk exposures in their portfolios and ready to tackle unforeseen, irregular climate patterns. Life/health insurance carriers must factor in the adverse health impact of extreme environmental and weather conditions while analyzing possible losses.

In the aftermath of catastrophic events, both insurers and the insured face potential financial and nonfinancial devastation unless they are well prepared. The priorities are clear: rein in costs and manage dynamic risks. At the same time, they must watch for opportunities to sustain profitable growth. For example, modified tariff structures in 2025 forced insurers and reinsurers to rework cost structures, increase premiums, and adopt other risk mitigation measures.

These pressing needs have compelled key decision-makers to revisit portfolio strategy and improve resilience so they can absorb shocks better.

Traditional methods relying on historical data are ill-equipped to fully assess the volatility associated with complex disaster scenarios and market conditions. Moreover, in this information age, insurers are under pressure to make better use of the data available.

Hence, many have turned to Catastrophe Modeling to strengthen their risk management arsenal and protect profit potential. It offers a more nuanced, proactive approach that helps uncover additional areas of potential liability by drawing on comprehensive and varied information sources concerning climate change, rapid urban development, and other man-made risk factors. It also uses data from sensors, satellite imagery, and social media for specific geographies to evaluate risk factors with greater precision. Armed with this intelligence, insurers can plan capital resources and reduce losses more effectively.

In short, it helps insurance carriers to: prevent what can be predicted; prepare for what cannot be predicted.

Catastrophe Modeling components used by insurers and reinsurers typically include:

Hazard: assesses the probability and severity of catastrophic events using a combination of historical data, scientific research, and statistical modeling.

Vulnerability: calculates the possible damage to properties and assets, taking into consideration parameters such as construction material, building codes, and retrofitting measures.

Exposure: provides data on properties, populations, infrastructure, and economic activities to help gauge the possible extent of risk to insured portfolios from a hazardous event.
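To illustrate how the hazard, vulnerability, and exposure components combine, here is a minimal Python sketch. It assumes a toy stochastic event set and a made-up linear vulnerability curve; the class names, curve parameters, and rates are hypothetical and do not represent any vendor's model. Each event's loss is the sum over locations of insured value times the damage ratio implied by the local hazard intensity; the average annual loss (AAL) is the rate-weighted sum of event losses; and, treating each event as an independent Poisson process, the annual probability of at least one loss above a threshold is 1 − exp(−rate).

```python
import math
from dataclasses import dataclass

@dataclass
class Location:
    """Exposure: one insured location (hypothetical fields)."""
    loc_id: str
    tiv: float          # total insured value, USD
    construction: str   # illustrative classes: "wood" or "masonry"

@dataclass
class Event:
    """Hazard: one stochastic event with an annual rate and local intensities."""
    event_id: str
    annual_rate: float
    intensity: dict     # loc_id -> hazard intensity (e.g. peak gust, m/s)

# Vulnerability: (damage threshold, saturation intensity) per construction class.
# These numbers are invented for illustration, not calibrated.
CURVES = {"wood": (30.0, 70.0), "masonry": (40.0, 90.0)}

def damage_ratio(intensity: float, construction: str) -> float:
    """Toy vulnerability curve: 0 below threshold, rising linearly to 1 at saturation."""
    threshold, saturation = CURVES[construction]
    if intensity <= threshold:
        return 0.0
    return min(1.0, (intensity - threshold) / (saturation - threshold))

def event_loss(event: Event, portfolio: list) -> float:
    """Ground-up loss for one event: sum of TIV x damage ratio across locations."""
    return sum(
        loc.tiv * damage_ratio(event.intensity.get(loc.loc_id, 0.0), loc.construction)
        for loc in portfolio
    )

def average_annual_loss(events: list, portfolio: list) -> float:
    """AAL: each event's loss weighted by its annual occurrence rate."""
    return sum(e.annual_rate * event_loss(e, portfolio) for e in events)

def exceedance_rate(events: list, portfolio: list, threshold: float) -> float:
    """Combined annual rate of events whose loss exceeds `threshold`."""
    return sum(e.annual_rate for e in events if event_loss(e, portfolio) > threshold)

def exceedance_probability(events: list, portfolio: list, threshold: float) -> float:
    """Annual probability of at least one such event, assuming Poisson occurrence."""
    return 1.0 - math.exp(-exceedance_rate(events, portfolio, threshold))
```

Evaluating `exceedance_probability` over a range of thresholds traces out an occurrence exceedance-probability (EP) curve, the kind of output insurers use alongside AAL for pricing and capital planning.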

Outcomes of these activities include:

While risk officers have ditched traditional methods in favor of Catastrophe Modeling, certain challenges persist that can weaken results. Addressing them requires the expertise and experience of companies like DPA (Decimal Point Analytics), which blend key technology and data science capabilities with deep knowledge of the challenges facing the insurance sector.

For each challenge below, we also map the corresponding solution that DPA offers.

Take geocoding, for instance, which is crucial for rating an insurance policy. This vital tool delivers pinpoint accuracy in risk assessment by identifying the exact geographic location of every insured property or asset. Importantly, it converts and standardizes addresses and locations into machine-readable formats, making it easier for underwriting teams to process them and quickly gain insights using advanced tools.
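The standardize-then-match step can be sketched in a few lines of Python. This is a simplified illustration, not a production geocoder: the abbreviation map is a tiny hypothetical subset, and the reference table (`GAZETTEER`) and its coordinates are placeholders standing in for a real address database. Addresses that fail to match return `None`, so they can be routed for manual review instead of silently entering the model.

```python
import re

# Illustrative street-suffix abbreviations only; production standardizers use
# full postal reference tables and positional rules ("ST" can also mean "SAINT").
ABBREVIATIONS = {"ST": "STREET", "AVE": "AVENUE", "RD": "ROAD", "BLVD": "BOULEVARD", "DR": "DRIVE"}

# Hypothetical reference table: standardized address -> (latitude, longitude).
# The coordinates are placeholders, not verified geocodes.
GAZETTEER = {
    "123 MAIN STREET MIAMI FL 33101": (25.77, -80.19),
}

def standardize_address(raw: str) -> str:
    """Uppercase, turn punctuation into spaces, collapse whitespace, expand abbreviations."""
    cleaned = re.sub(r"[^\w\s]", " ", raw.upper())
    return " ".join(ABBREVIATIONS.get(token, token) for token in cleaned.split())

def geocode(raw: str):
    """Return (lat, lon) for a matched address, or None to flag the record for review."""
    return GAZETTEER.get(standardize_address(raw))
```

Because both "123 Main St., Miami, FL 33101" and "123 main st miami fl 33101" standardize to the same key, differently keyed inputs resolve to one location.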

With climate change, the need for accurate, granular geographic data has intensified. Such data gives underwriters and risk assessors deep, specific information on the hazard conditions in a given geographic area. Experts believe that catastrophe models help carriers arrive at more advantageous premiums and mitigate pricing risk when they mark out narrower territories.
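One simple way to mark out narrower territories is to snap each geocoded location to a latitude/longitude grid and aggregate exposure per cell. The sketch below assumes a plain degree grid and hypothetical `(lat, lon, tiv)` tuples; real territory schemes may instead use postal codes, hazard zones, or other grids. Shrinking `cell_deg` splits exposure that a coarse grid would lump into one territory.

```python
import math
from collections import defaultdict

def grid_cell(lat: float, lon: float, cell_deg: float) -> tuple:
    """Row/column index of the lat/lon grid cell (side cell_deg degrees) containing the point."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))

def exposure_by_cell(locations, cell_deg: float) -> dict:
    """Total insured value per grid cell; `locations` is an iterable of (lat, lon, tiv) tuples."""
    totals = defaultdict(float)
    for lat, lon, tiv in locations:
        totals[grid_cell(lat, lon, cell_deg)] += tiv
    return dict(totals)
```

With two nearby locations, a 1-degree grid places both in a single cell, while a 0.1-degree grid separates them, letting each be rated against its own local hazard.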

However, insurers that still geocode manually struggle with slow, error-prone processing, which delays technical pricing and underwriting.

To address this challenge, DPA provides advanced geocoding tools that cleanse and accurately map location data, significantly improving accuracy and reducing turnaround time from days to minutes.

In a data-intensive sector like insurance, data can be both an asset and a liability; its quality is the decisive factor. Companies have realized this and invested heavily to digitally transform and modernize crucial yet complex processes such as underwriting, claims processing, risk assessment, and regulatory compliance. However, these processes become efficient only if the data they run on is accurate, comprehensive, consistent, and dependable.

In other words, the effectiveness of Catastrophe Modeling outcomes depends heavily on data quality. An insurer’s investments in these advanced tools will amount to little if data quality is poor, because the output will be unreliable. In fact, poor data quality can weaken decisions, undermine the consistency of reports, and cause errors in pricing strategy, among other issues.

DPA’s solutions help ensure data quality through rigorous processes that clean and validate all data, which in turn raises confidence in the final outputs.
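As a generic illustration of what rule-based validation of exposure data can look like (not DPA's actual process), the sketch below checks one record for completeness and plausibility. The field names, the allowed construction codes, and the 1800 lower bound on year built are hypothetical choices for this example; a real schema would follow the insurer's or modeling vendor's own data standards.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative construction classes; a real schema would use the
# insurer's or modeling vendor's own code list.
ALLOWED_CONSTRUCTION = {"wood", "masonry", "steel", "concrete"}

@dataclass
class ExposureRecord:
    policy_id: str
    lat: Optional[float]
    lon: Optional[float]
    tiv: Optional[float]          # total insured value
    year_built: Optional[int]
    construction: Optional[str]

def validate_record(rec: ExposureRecord, latest_year: int) -> list:
    """Return a list of human-readable issues; an empty list means the record passed."""
    issues = []
    # Completeness: every modeled field must be present.
    for name in ("lat", "lon", "tiv", "year_built", "construction"):
        if getattr(rec, name) is None:
            issues.append(f"{name} missing")
    # Validity: values must fall within plausible ranges or code lists.
    if rec.lat is not None and not -90 <= rec.lat <= 90:
        issues.append("lat out of range")
    if rec.lon is not None and not -180 <= rec.lon <= 180:
        issues.append("lon out of range")
    if rec.tiv is not None and rec.tiv <= 0:
        issues.append("tiv not positive")
    if rec.year_built is not None and not 1800 <= rec.year_built <= latest_year:
        issues.append("year_built implausible")
    if rec.construction is not None and rec.construction not in ALLOWED_CONSTRUCTION:
        issues.append("unknown construction code")
    return issues
```

Returning a list of issues, rather than stopping at the first failure, lets a data team fix every problem in a record in one pass before it reaches the model.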

Insurance companies continue to face many new sources of unpredictability.

Consider cyber insurance, where competitive pricing initially offered a path to revenue and market share gains. Over time, however, the cyberattack environment has intensified in an increasingly digital world, driven by remote and hybrid working, a wider threat surface and vulnerabilities (including third-party vendor networks), and more sophisticated threat actors.

This heightened volatility has led to more cyber insurance claims, with potentially more frequent and larger liabilities than ever, which insurers may fail to anticipate or build into their pricing. In fact, even sector experts may not fully appreciate today’s dynamic risks and cyber coverage demands. Many insurers have relied on data supplied by their clients, so their decisions were often driven by insufficient, outdated, or biased insights. Moreover, cyber underwriting has traditionally revolved around manual processes and historical data.

Underwriting teams must revisit their portfolios to adjust premiums, underwrite risks with a better understanding of them, and minimize exposure in the modern threat landscape.

Both insurers and reinsurers need pricing systems that support both short-term and long-term strategies. Otherwise, they may fail to make timely, appropriate decisions, hurting profitability and resilience.

Those still using outdated models and pricing are at greater risk of underpricing.

Leveraging our expertise in technical pricing and detailed assumptions, we give clients access to the most current models and market data, enabling accurate and competitive pricing.
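Technical pricing builds a premium up from modeled losses. The sketch below uses one common textbook structure, assumed here purely for illustration: expected annual loss plus a risk load proportional to the standard deviation of annual loss (the standard-deviation premium principle), grossed up for expenses. It further assumes each modeled event occurs as an independent Poisson process, so annual loss has mean Σ rate·loss and variance Σ rate·loss². The `risk_multiplier` and `expense_ratio` values are hypothetical inputs; actual loadings vary by carrier and line of business.

```python
import math

def technical_premium(event_losses, annual_rates, risk_multiplier, expense_ratio):
    """Loss cost plus risk load, grossed up for expenses (standard-deviation principle).

    Assumes independent Poisson event occurrence, so annual aggregate loss has
    mean sum(rate * loss) and variance sum(rate * loss**2).
    """
    if not 0 <= expense_ratio < 1:
        raise ValueError("expense_ratio must be in [0, 1)")
    pairs = list(zip(annual_rates, event_losses))
    expected_loss = sum(rate * loss for rate, loss in pairs)            # AAL
    std_dev = math.sqrt(sum(rate * loss * loss for rate, loss in pairs))
    risk_load = risk_multiplier * std_dev
    # Divide by (1 - expense_ratio) so expenses are covered as a share of the final premium.
    return (expected_loss + risk_load) / (1 - expense_ratio)
```

Setting `risk_multiplier` and `expense_ratio` to zero reduces the premium to the pure AAL, which makes it easy to see how much of a quoted price is loss cost versus loadings; a model that understates tail losses understates both.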

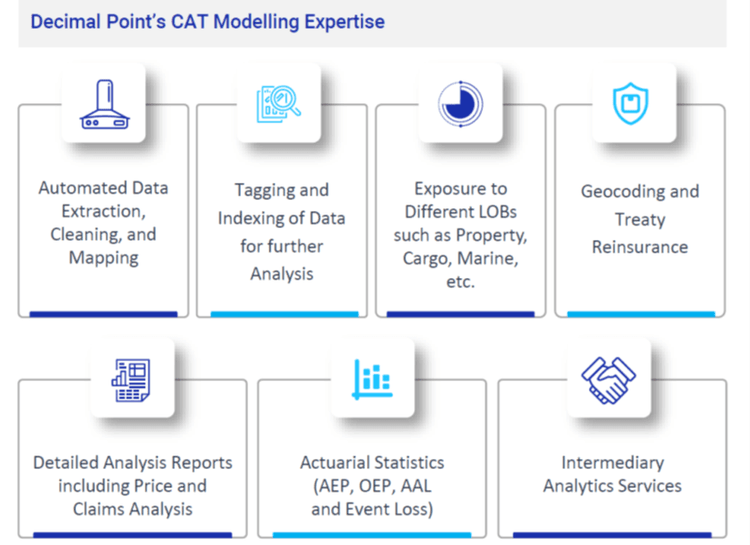

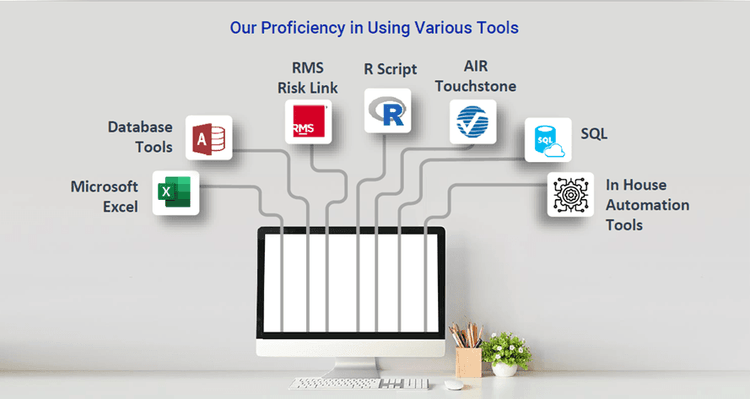

Catastrophe Modeling thrives on collecting data and predicting outcomes from it. Decimal Point Analytics continues to lead innovation in applying technology to solve complex problems. Our expertise in the Data Management and Finance domains enables us to analyze and interpret complex data sets for specific geographies, identify risks, and recalibrate strategies accordingly.

Empower your insurance operations with AI-driven catastrophe modeling, accurate data, and intelligent pricing.

Connect with Decimal Point Analytics to explore customized analytics solutions for risk mitigation and profitability.